#2.8 Extracting Locations and Finishing up

# 반복될 수 있는 부분을 함수로 만들어 둔다.

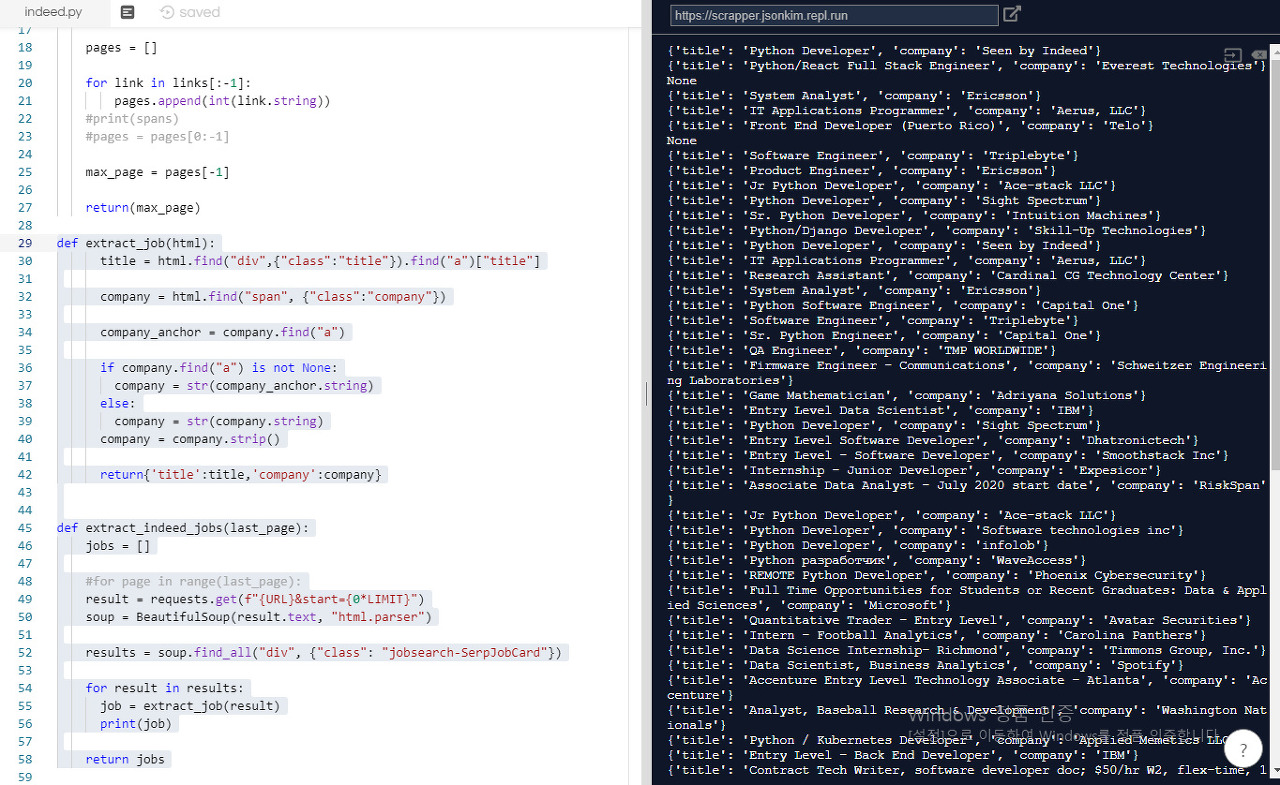

def extract_job(html): #이 함수를 만들었다. html이 result 이다.

title = html.find("div",{"class":"title"}).find("a")["title"]

company = html.find("span", {"class":"company"})

company_anchor = company.find("a")

if company.find("a") is not None:

company = str(company_anchor.string)

else:

company = str(company.string)

company = company.strip()

return{'title':title,'company':company}

def extract_indeed_jobs(last_page):

jobs = []

#for page in range(last_page):

result = requests.get(f"{URL}&start={0*LIMIT}")

soup = BeautifulSoup(result.text, "html.parser")

results = soup.find_all("div", {"class": "jobsearch-SerpJobCard"})

for result in results:

job = extract_job(result) #result가 html이다.

print(job)

return jobs

def extract_indeed_jobs(last_page):

jobs = []

#for page in range(last_page):

result = requests.get(f"{URL}&start={0*LIMIT}")

soup = BeautifulSoup(result.text, "html.parser")

results = soup.find_all("div", {"class": "jobsearch-SerpJobCard"})

for result in results:

job = extract_job(result)

jobs.append(job)

return jobs

main.py

from indeed import extract_indeed_pages, extract_indeed_jobs

last_indeed_page = extract_indeed_pages()

indeed_jpbs = extract_indeed_jobs(last_indeed_page)

print(indeed_jpbs)

#최종

import requests

from bs4 import BeautifulSoup

LIMIT = 50

URL = f"https://www.indeed.com/jobs?q=python&limit={LIMIT}"

def extract_indeed_pages():

result = requests.get(URL)

soup = BeautifulSoup(result.text, "html.parser")

pagination = soup.find("div", {"class": "pagination"})

links = pagination.find_all('a')

pages = []

for link in links[:-1]:

pages.append(int(link.string))

#print(spans)

#pages = pages[0:-1]

max_page = pages[-1]

return(max_page)

def extract_job(html):

title = html.find("div",{"class":"title"}).find("a")["title"]

company = html.find("span", {"class":"company"})

company_anchor = company.find("a")

if company.find("a") is not None:

company = str(company_anchor.string)

else:

company = str(company.string)

company = company.strip()

location = html.find("div",{"class":"recJobLoc"})["data-rc-loc"]

job_id = html["data-jk"]

return{

'title':title,

'company':company,

'location':location,

"link":f"https://www.indeed.com/viewjob?jk={job_id}"

}

def extract_indeed_jobs(last_page):

jobs = []

for page in range(last_page):

print(f"Scrapping pge{page}") #추가

result = requests.get(f"{URL}&start={page*LIMIT}")

soup = BeautifulSoup(result.text, "html.parser")

results = soup.find_all("div", {"class": "jobsearch-SerpJobCard"})

for result in results:

job = extract_job(result)

jobs.append(job)

return jobs

'Language > 파이썬' 카테고리의 다른 글

| Building a Job Scrapper(4) (0) | 2020.01.04 |

|---|---|

| Building a Job Scrapper(3) (0) | 2020.01.04 |

| Building a Job Scrapper(2) (0) | 2020.01.04 |

| Building a Job Scrapper (0) | 2020.01.02 |

| Data Analysis/데이터로 그래프그리기 (0) | 2018.01.05 |